Autonomous drones can help search and rescue after disasters

Drones already help with search and rescue, but teaching machines to identify victims on their own could free up human rescuers to do other crucial work.

When disasters happen – whether a natural disaster like a flood or earthquake, or a human-caused one like a mass shooting or bombing – it can be extremely dangerous to send first responders in, even though there are people who badly need help.

Drones are useful, and are helping in the recovery after the deadly Alabama tornadoes, but most require individual pilots, who fly the unmanned aircraft by remote control. That limits how quickly rescuers can view an entire affected area, and can delay actual aid from reaching victims.

Autonomous drones could cover more ground more quickly, but they would be more effective only if they could, on their own, help rescuers identify people in need. At the University of Dayton Vision Lab, we are developing systems that can help spot people or animals – especially ones who might be trapped by fallen debris. Our technology mimics the behavior of a human rescuer, looking briefly at wide areas and quickly choosing specific regions to examine more closely.

Looking for an object in a chaotic scene

Disaster areas are often cluttered with downed trees, collapsed buildings, torn-up roads and other disarray that can make spotting victims in need of rescue very difficult.

My research team has developed an artificial neural network system that can run on a computer onboard a drone. The system emulates some of the strengths of human vision. It analyzes images captured by the drone’s camera and communicates notable findings to human supervisors.

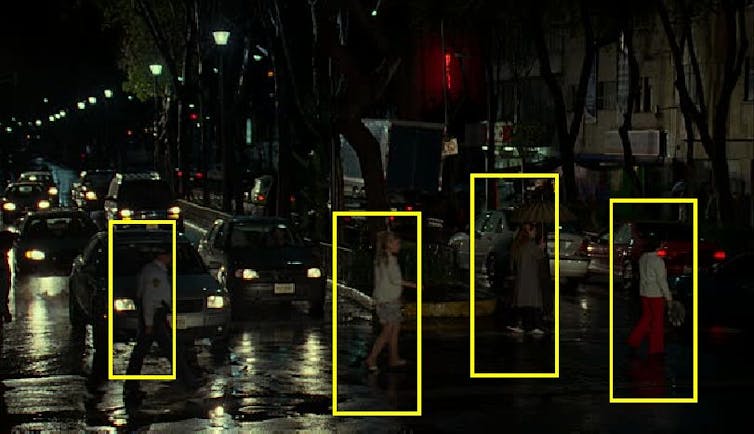

First, our system processes the images to improve their clarity. Just as humans squint their eyes to adjust their focus, our technologies take detailed estimates of darker regions in a scene and computationally lighten the images. When images are too hazy or foggy, the system recognizes they’re too bright and reduces the whiteness of the image to see the actual scene more clearly.
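One common way to lighten dark regions computationally is gamma correction, sketched below in Python. This is an illustrative stand-in, not the Vision Lab's actual enhancement method; the function name `lighten_dark_regions`, the `gamma` value and the assumption that pixel brightness is scaled from 0 to 1 are all choices made for this example.

```python
def lighten_dark_regions(image, gamma=0.5):
    """Brighten a grayscale image with gamma correction.

    `image` is a list of rows of brightness values in [0, 1] (an assumption
    made for this sketch). A gamma below 1 raises dark pixels far more than
    bright ones, so shadows open up while highlights barely move.
    """
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    return [[pixel ** gamma for pixel in row] for row in image]


# Hypothetical 2x2 scene: a deep shadow, a bright area, pure black, pure white.
dark_scene = [[0.04, 0.81], [0.0, 1.0]]
enhanced = lighten_dark_regions(dark_scene)
```

With `gamma` set to 0.5, the shadowed pixel at 0.04 rises to about 0.2, while the bright pixel at 0.81 rises only to about 0.9. Pure black and pure white stay where they are.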

In a rainy environment, human brains use a brilliant strategy to see clearly. By noticing the parts of a scene that don’t change – and the ones that do, as the raindrops fall – people can see reasonably well despite rain. Our technology uses the same strategy, continuously investigating the contents of each location in a sequence of images to get clear information about the objects in that location.
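The idea of separating what changes from what stays the same can be illustrated with a per-pixel median over a short sequence of frames. The sketch below is a simplified illustration under stated assumptions (a steady camera, grayscale frames stored as lists of rows); the function name `remove_transient_streaks` is hypothetical and this is not the lab's published algorithm.

```python
from statistics import median


def remove_transient_streaks(frames):
    """Return one image whose pixels are the per-location median over time.

    `frames` is a list of equally sized grayscale images (lists of rows).
    A raindrop changes a location in only a few frames, so the median
    ignores those brief outliers and keeps the stable background value.
    """
    if not frames:
        raise ValueError("need at least one frame")
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [median(frame[y][x] for frame in frames) for x in range(width)]
        for y in range(height)
    ]


# Three hypothetical 1x2 frames; a raindrop brightens the left pixel once.
frames = [
    [[0.2, 0.3]],
    [[0.9, 0.3]],  # raindrop passes through the left pixel
    [[0.2, 0.3]],
]
clean = remove_transient_streaks(frames)
```

Here the left pixel reads 0.2 in two of the three frames, so the median restores 0.2 and the momentary 0.9 from the raindrop is discarded.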

We also have developed technology that can make images from a drone-borne camera larger, brighter and clearer. By expanding the size of the image, both algorithms and people can see key features more clearly.

Confirming objects of interest

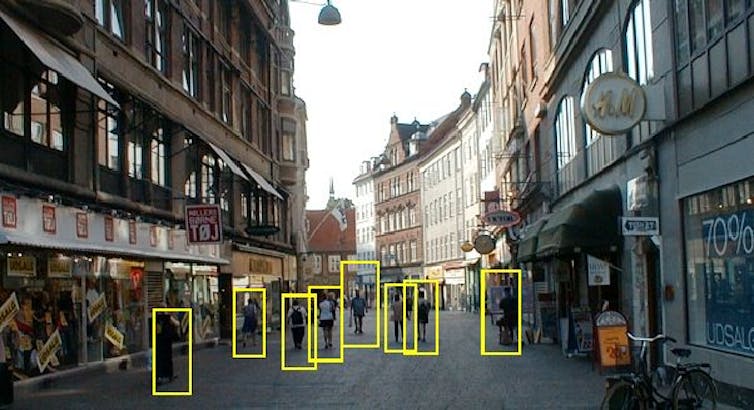

Our system can identify people in various positions, such as lying prone or curled in the fetal position, even from different viewing angles and in varying lighting conditions.

The human brain can look at one view of an object and envision how it would look from other angles. When police issue an alert asking the public to look for someone, they often include a still photo – knowing that viewers’ minds will imagine three-dimensional views of how that person might look, and recognize them on the street, even if they don’t get the exact same view as the photo offered. We employ this strategy by computing three-dimensional models of people – either general human shapes or more detailed projections of specific people. Those models are used to match similarities when a person appears in a scene.

We have also developed a way to detect parts of an object, without seeing the whole thing. Our system can be trained to detect and locate a leg sticking out from under rubble, a hand waving at a distance, or a head popping up above a pile of wooden blocks. It can tell a person or animal apart from a tree, bush or vehicle.

Putting the pieces together

During its initial scan of the landscape, our system mimics the approach of an airborne spotter, examining the ground to find possible objects of interest or regions worth further examination, and then looking more closely. For example, an aircraft pilot who is looking for a truck on the ground would typically pay less attention to lakes, ponds, farm fields and playgrounds – because trucks are less likely to be in those areas. Our autonomous technology employs the same strategy to narrow the search to the most significant regions in the scene.
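A minimal sketch of this coarse-to-fine idea follows. It assumes the scene has already been divided into tiles and that an earlier, cheap pass has given each tile a score between 0 and 1 for how likely it is to contain something of interest. The scores, the threshold and the function name `select_regions` are all hypothetical, chosen only to illustrate the selection step.

```python
def select_regions(tile_scores, threshold=0.5):
    """Pick tiles worth a closer look, most promising first.

    `tile_scores[r][c]` is a hypothetical coarse estimate (0 to 1) of how
    likely tile (r, c) is to contain something of interest.
    """
    candidates = [
        (score, (r, c))
        for r, row in enumerate(tile_scores)
        for c, score in enumerate(row)
        if score >= threshold
    ]
    # Highest-scoring tiles are examined first.
    candidates.sort(key=lambda item: item[0], reverse=True)
    return [position for _, position in candidates]


# Hypothetical coarse scan of a 2x2 grid of tiles.
coarse_scan = [
    [0.10, 0.80],  # open water, debris pile
    [0.60, 0.05],  # collapsed building, empty field
]
regions = select_regions(coarse_scan)
```

In this example only the debris pile and the collapsed building pass the threshold, so the detailed analysis runs on two tiles instead of four.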

Then our system investigates each selected region to obtain information about the shape, structure and texture of objects there. When it detects a set of features that matches a human being or part of a human, it flags that as a location of a victim.

The drone also collects GPS data about its location, and senses how far it is from the objects it’s photographing. That information lets the system calculate the location of each person needing assistance and alert rescuers.
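The arithmetic behind that step can be sketched as follows, assuming the drone's GPS fix, the compass bearing toward the detected person and the horizontal ground distance are already known. The function name `locate_target`, the flat-Earth approximation and the sample coordinates are assumptions made for this illustration; a real system would also need to account for camera angle, altitude and sensor error.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius, in meters


def locate_target(drone_lat, drone_lon, bearing_deg, ground_distance_m):
    """Estimate a target's latitude and longitude from the drone's GPS fix.

    Uses a flat-Earth approximation that is reasonable only for short
    distances. `bearing_deg` is measured clockwise from north.
    """
    bearing = math.radians(bearing_deg)
    north_m = ground_distance_m * math.cos(bearing)
    east_m = ground_distance_m * math.sin(bearing)
    dlat = math.degrees(north_m / EARTH_RADIUS_M)
    dlon = math.degrees(
        east_m / (EARTH_RADIUS_M * math.cos(math.radians(drone_lat)))
    )
    return drone_lat + dlat, drone_lon + dlon


# Hypothetical fix: a person detected 100 meters due north of the drone.
target_lat, target_lon = locate_target(32.6, -85.4, 0.0, 100.0)
```

A target 100 meters due north shifts the latitude by roughly 0.0009 degrees and leaves the longitude unchanged.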

This entire process – capturing an image, processing it for maximum visibility and analyzing it to identify people who might be trapped or concealed – takes about one-fifth of a second on the ordinary laptop computer that the drone carries along with its high-resolution camera.

The U.S. military is interested in this technology. We have worked with the U.S. Army Medical Research and Materiel Command to find wounded individuals on a battlefield who need rescue. We have adapted this work to serve utility companies searching for intrusions on pipeline paths by construction equipment or vehicles that may damage the pipelines. Utility companies are also interested in detecting any new building construction near the pipeline pathways. All of these groups – and many more – are interested in technology that can see as humans can see, especially in places humans can’t be.

Vijayan Asari is affiliated with University of Dayton, Dayton, Ohio, USA. Dr. Vijayan Asari is a Fellow of SPIE (Society of Photo-Optical Instrumentation Engineers) and a Senior Member of IEEE (Institute of Electrical and Electronics Engineers). He is a member of the IEEE Computational Intelligence Society (CIS), IEEE Internet of Things (IoT) Community, Society for Imaging Science and Technology (IS&T), and member of the Institute for Systems and Technologies of Information, Control and Communication (INSTICC). Dr. Asari is a co-organizer of several SPIE and IEEE conferences and workshops. Dr. Asari advises graduate and undergraduate research students in Vision Lab at the University of Dayton. Dr. Asari does not receive any funding for this specific research project. He uses internal funding for the human detection in complex environment research activity. Dr. Asari did receive funding from various organizations for several research activities that are linked to this research project. He received funding from the US Army Night Vision and Electronic Sensors Directorate (NVESD) for long range human detection in infrared imagery, from the US Army Medical Research and Materiel Command (USAMRMC) for detection of wounded individuals in war field (Research for Casualty Care and Management), from the Air Force Research Laboratory (AFRL) for object detection and tracking in wide area motion imagery, from Pacific Gas & Electric Company (PG&E) for automatic building change detection in satellite imagery, and from the Pipeline Research Council International (PRCI) for intrusion detection on pipeline right-of-ways.
