How humans fit into Google’s machine future

Google controls what billions of people find, see and know, and even what they are aware of. As it gets better at delivering what it thinks people want, how will that affect humans' perceptions of their own needs?

Google began humbly in 1998: formally incorporated that year and operating out of a Menlo Park garage, it served search results from a server housed in Lego bricks. It had a straightforward goal: make the poorly indexed World Wide Web accessible to humans. Its success rested on an algorithm that analyzed the linking structure of the internet itself to judge which web pages were most reputable and useful. But founders Sergey Brin and Larry Page had a much more ambitious goal: They wanted to organize the world's information.

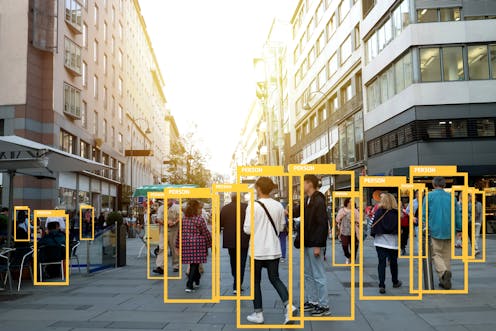

Twenty years later, they have built a company that reaches far beyond even that lofty goal, providing individuals and businesses alike with email, file sharing, web hosting, home automation, smartphones and countless other services. The playful startup that began as a surveyor of the web has become an architect of reality, creating and defining what its billions of users find, see, know or are even aware of.

Google controls more than 90 percent of the global search market, driving individuals and companies alike to design websites that appeal to the company's algorithms. If Google can't find a piece of information, that knowledge simply doesn't exist for Google users. If it's not on Google, does it really exist at all?

The intimacy machine

Despite the billions of search queries it answers, Google is not just an answer machine. It monitors which results people click on, treats those as more relevant and valuable, and ranks them more prominently in future searches on that topic. The company also monitors user activity across its email, business applications, music services and mobile operating system, feeding that data back into its systems to give users more of what they like.

The data it collects is the real source of Google's dominance, making the company's services ever better at giving users what they want. Through autocomplete and personalized filtering of search results, Google tries to anticipate your needs, sometimes before you even have them. As Google's former executive chairman Eric Schmidt once put it, "I actually think most people don't want Google to answer their questions. They want Google to tell them what they should be doing next."

After two more decades of progress, Google will be even more accomplished, perhaps approaching a vision Brin expressed years ago: "The perfect search engine would be like the mind of God." People are coming to rely on these tools, with their advanced algorithms based on artificial intelligence, not just to know things but to help them think.

The search bar has already become a place where people ask personal questions, a kind of confessional or stream of consciousness that reveals a great deal about who users are, what they believe and what they want. In the future, Google will know you even more intimately, combining search results, browsing history and location tracking with biophysical health data from wearables and other sources that could offer powerful insights into your state of mind.

A new kind of vulnerability

It is not far-fetched to imagine that, in the future, Google might know that a person is depressed, or has cancer, before she realizes it herself. Beyond that, Google may play a crucial role in an ever-tightening alignment between what you think your needs are and what Google tells you they are.

Beyond its effects on individual people, Google is amassing power to influence society, perhaps invisibly. Fiction offers a warning about what that might look like: In the movie "Ex Machina," an entrepreneurial genius reveals how he turned the raw material of billions of search queries into an artificial mind that is highly effective at manipulating humans based on what it learns about their behaviors and biases.

But this situation isn't really fiction. As long ago as 2014, researchers at Facebook infamously demonstrated how easy it is to manipulate users' emotions by showing them more positive or more negative posts in their news feeds. As people hand algorithms more power over their daily lives, will they notice how the machines are steering them?

Surviving the glorious future

Whether Google ultimately exercises this power depends on its human leaders – and on the digital society Google is so central to building. The company is investing heavily in machine intelligence, committing itself to a highly automated future in which the mechanics, and perhaps the true insights, of the quest for knowledge become difficult or impossible for humans to understand.

Google is gradually becoming an extension of individual and collective thought. It will get harder to recognize where people end and Google begins. People will become both empowered by and dependent on the technology, which will be easy for anyone to access but hard for anyone to control.

Humans will need to find ways to collaborate with – and direct the activities of – increasingly sophisticated machine intelligence, rather than merely becoming users who blindly follow the leads of black boxes they no longer understand or control.

Our studies of the complex relationships between people and technologies suggest that storytelling will be central to this new understanding of algorithms. The human brain is bad at understanding and processing raw data – which is, of course, a machine's core strength. For humans and machines to work together, their relationship will have to draw on a uniquely human strength: telling stories. People will work best with systems that can reason through stories and explain their actions in ways humans can understand and modify.

The more that people entrust computer-based systems with organizing culture and society, the more they should demand those systems function according to rules humans can comprehend. The day we stop being the primary authors of the story of humankind is the day it stops being a story about us.

Ed Finn anticipates receiving funding from Google in fall 2018 to co-sponsor a research project, funded by the Hewlett Foundation, exploring the relationship between science fiction, artificial intelligence and technology policy. He owns five shares of Alphabet Inc. Class A stock, not counting a modest and almost inscrutable quantity of partial shares held through the managed investments in his Arizona State University retirement account.

Andrew Maynard does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.
