Why you stink at fact-checking

Cognitive psychologists know that, because of the way our minds work, we not only fail to notice errors and misinformation we know are wrong, we also go on to remember them as true.

Here’s a quick quiz for you:

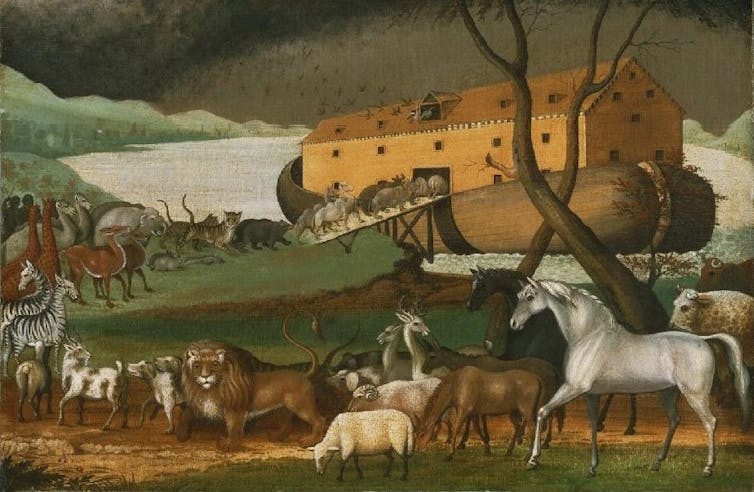

- In the biblical story, what was Jonah swallowed by?

- How many animals of each kind did Moses take on the Ark?

Did you answer “whale” to the first question and “two” to the second? Most people do … even though they’re well aware that it was Noah, not Moses, who built the Ark in the biblical story.

Psychologists like me call this phenomenon the Moses Illusion. It’s just one example of how people are very bad at picking up on factual errors in the world around them. Even when people know the correct information, they often fail to notice errors and will even go on to use that incorrect information in other situations.

Research from cognitive psychology shows that people are naturally poor fact-checkers and that it is very difficult for us to compare things we read or hear to what we already know about a topic. In what’s been called an era of “fake news,” this reality has important implications for how people consume journalism, social media and other public information.

Failing to notice what you know is wrong

The Moses Illusion has been studied repeatedly since the 1980s. It occurs with a variety of questions, and the key finding is that – even though people know the correct information – they don’t notice the error and proceed to answer the question.

In the original study, 80 percent of the participants failed to notice the error in the question despite later correctly answering the question “Who was it that took the animals on the Ark?” This failure occurred even though participants were warned that some of the questions would have something wrong with them and were given an example of an incorrect question.

The Moses Illusion demonstrates what psychologists call knowledge neglect – people have relevant knowledge, but they fail to use it.

One way my colleagues and I have studied this knowledge neglect is by having people read fictional stories that contain true and false information about the world. For example, one story is about a character’s summer job at a planetarium. Some information in the story is correct: “Lucky me, I had to wear some huge old space suit. I don’t know if I was supposed to be anyone in particular – maybe I was supposed to be Neil Armstrong, the first man on the moon.” Other information is incorrect: “First I had to go through all the regular astronomical facts, starting with how our solar system works, that Saturn is the largest planet, etc.”

Later, we give participants a trivia test with some new questions (Which precious gem is red?) and some questions that relate to the information from the story (What is the largest planet in the solar system?). We reliably find positive effects of reading the correct information within the story – participants are more likely to answer “Who was the first person to step foot on the moon?” correctly. We also see negative effects of reading the misinformation – participants are both less likely to recall that Jupiter is the largest planet and more likely to answer with Saturn.

These negative effects of reading false information occur even when the incorrect information directly contradicts people’s prior knowledge. In one study, my colleagues and I had people take a trivia test two weeks before reading the stories. Thus, we knew what information each person did and did not know. Participants still learned false information from the stories they later read. In fact, they were equally likely to pick up false information from the stories when it did and did not contradict their prior knowledge.

Can you improve at noticing incorrect info?

So people often fail to notice errors in what they read and will use those errors in later situations. But what can we do to prevent this influence of misinformation?

Expertise or greater knowledge seems to help, but it doesn’t solve the problem. Even biology graduate students will attempt to answer distorted questions such as “Water contains two atoms of helium and how many atoms of oxygen?” – though they are less likely to answer them than history graduate students. (The pattern reverses for history-related questions.)

Many of the interventions my colleagues and I have implemented to try to reduce people’s reliance on the misinformation have failed or even backfired. One initial thought was that participants would be more likely to notice the errors if they had more time to process the information. So, we presented the stories in a book-on-tape format and slowed down the presentation rate. But instead of using the extra time to detect and avoid the errors, participants were even more likely to produce the misinformation from the stories on a later trivia test.

Next, we tried highlighting the critical information in a red font. We told readers to pay particular attention to the information presented in red with the hope that paying special attention to the incorrect information would help them notice and avoid the errors. Instead, they paid additional attention to the errors and were thus more likely to repeat them on the later test.

The one thing that does seem to help is to act like a professional fact-checker. When participants are instructed to edit the story and highlight any inaccurate statements, they are less likely to learn misinformation from the story. Similar results occur when participants read the stories sentence by sentence and decide whether each sentence contains an error.

It’s important to note that even these “fact-checking” readers miss many of the errors and still learn false information from the stories. For example, in the sentence-by-sentence detection task, participants caught about 30 percent of the errors. But given their prior knowledge, they should have been able to detect at least 70 percent. So this type of careful reading does help, but readers still miss many errors and will use them on a later test.

Quirks of psychology make us miss mistakes

Why are human beings so bad at noticing errors and misinformation? Psychologists believe that there are at least two forces at work.

First, people have a general bias to believe that things are true. (After all, most things that we read or hear are true.) In fact, there’s some evidence that we initially process all statements as true and that it then takes cognitive effort to mentally mark them as false.

Second, people tend to accept information as long as it’s close enough to the correct information. Natural speech often includes errors, pauses and repeats. (“She was wearing a blue – um, I mean, a black, a black dress.”) One idea is that to maintain conversations we need to go with the flow – accept information that is “good enough” and just move on.

And people don’t fall for these illusions when the incorrect information is obviously wrong. For example, people don’t try to answer the question “How many animals of each kind did Nixon take on the Ark?” and people don’t believe that Pluto is the largest planet after reading it in a fictional story.

Detecting and correcting false information is difficult work and requires fighting against the ways our brains like to process information. Critical thinking alone won’t save us. Our psychological quirks put us at risk of falling for misinformation, disinformation and propaganda. Professional fact-checkers provide an essential service in hunting out incorrect information in the public view. As such, they are one of our best hopes for zeroing in on errors and correcting them, before the rest of us read or hear the false information and incorporate it into what we know of the world.

Lisa Fazio receives funding from the Rita Allen Foundation.
