Grammys’ AI rules aim to keep music human, but large gray area leaves questions about authenticity a

AI is already in much of the music you hear. It can be as mundane as a production tool or as deceptive as a fake recording artist – and a whole lot in between.

At its best, artificial intelligence can assist people in analyzing data, automating tasks and developing solutions to big problems: fighting cancer, hunger, poverty and climate change. At its worst, AI can assist people in exploiting other humans, damaging the environment, taking away jobs and, eventually, making us lazy and less innovative.

Likewise, AI is both a boon and a bane for the music industry. As a recording engineer and professor of music technology and production, I see a large gray area in between.

The National Academy of Recording Arts and Sciences, better known as the Recording Academy, has taken steps to address AI in recognizing contributions and protecting creators. Specifically, the academy says, only humans are eligible for a Grammy Award: “A work that contains no human authorship is not eligible in any categories.”

The academy says that the human component must be meaningful and significant to the work submitted for consideration. Right now, that means that it’s OK for me to use what’s marketed as an AI feature in a software product to standardize volume levels or organize a large group of files in my sample library. These tools help me to work faster in my digital audio workstation.

However, it is not OK in terms of Grammy consideration for me to use an AI music service to generate a song that combines the styles of, say, a popular male folk country artist – someone like Tyler Childers – and, say, a popular female eclectic pop artist – someone like Lady Gaga – singing a duet about “Star Trek.”

The gray zone

It gets trickier when you go deeper.

There is quite a bit of gray area between generating a song with text prompts and using a tool to organize your data. Is it OK by the Recording Academy’s Grammy standards to use an AI music generator to add backing vocals to a song I wrote and recorded with humans? Almost certainly. The same holds true if someone uses a feature in a digital audio workstation to add variety and “swing” to a drum pattern while producing a song.

What about using an AI tool to generate a melody and lyrics that become the hook of the song? Right now, a musician or nonmusician could use an AI tool to generate a chorus for a song with a prompt like this one:

“Write an eight measure hook for a pop song that is in the key of G major and 120 beats per minute. The hook should consist of a catchy melody and lyrics that are memorable and easily repeatable. The topic shall be on the triumph of the human spirit in the face of adversity.”

If I take what an AI tool generates based on that prompt, write a couple of verses and a bridge to fit with it, then have humans play the whole thing, is that still a meaningful and significant human contribution?

The performance most certainly is, but what about the writing of the song? If AI generates the catchy part first, does that mean it is ultimately responsible for the other sections created by a human? Is the human who is feeding those prompts making a meaningful contribution to the creation of the music you end up hearing?

AI music is here

The Recording Academy is doing its best right now to recognize and address these challenges with technology that is evolving so quickly.

Not so long ago, pitch correction software like Auto-Tune caused quite a bit of controversy in music. Now, Auto-Tune, Melodyne and other pitch correction software can be heard in almost every genre of music, and their use is no barrier to winning a Grammy.

Maybe the average music listener won’t bat an eye in 10 years when they discover AI had been used to create a song they love. There are already folks listening to AI-generated music by choice today.

You are almost certainly encountering AI-generated articles (no, not this one). You are probably seeing a lot of AI slop if you are an avid social media consumer.

The truth is you might already be listening to AI-generated music, too. Some major streaming services, like Spotify, aren’t doing much to identify or limit AI-generated music on their platforms.

On Spotify, an AI “artist” by the name of Aventhis currently has over 1 million monthly listeners and no disclosure that it is AI-generated. YouTube comments on the Aventhis song “Mercy on My Grave” suggest that the majority of commenters believe a human wrote it. This raises the question of why neither Spotify nor YouTube discloses this information; the only hint is a line in the artist’s description about “[h]arnessing the creative power of AI as part of his artistic process.”

Not only can AI be used to create a song, but AI bots can also be used to generate clicks and listens for it. This raises the possibility that the streaming services’ recommendation algorithms are being trained to push this music to human subscribers. For the record, Spotify and most other streaming services say they don’t support this practice.

Trying to keep it real

If you feel that AI in music hurts human creators and makes the world less-than-a-better place, you have options for avoiding it. Determining whether a song is AI-written is possible, though not foolproof. You can also find services that aim to limit AI in music.

Bandcamp recently set out guidelines for AI music on its platform that are similar to the Recording Academy’s and even friendlier to music creators. As of January 2026, Bandcamp does not allow music “that is generated wholly or in substantial part by AI.” Regardless of your opinion of AI-generated music, Bandcamp’s approach gives artists and listeners a platform where human creation is central to the experience.

Ideally, Spotify and the other streaming platforms would provide clear disclaimers and offer listeners filters to customize their use of the services based on AI content. In the meantime, AI in music is likely to have a large gray area between acceptable tools and questionable practices.

Mark Benincosa does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

Read These Next

Drug company ads are easy to blame for misleading patients and raising costs, but research shows the

Officials and policymakers say direct-to-consumer drug advertising encourages patients to seek treatments…

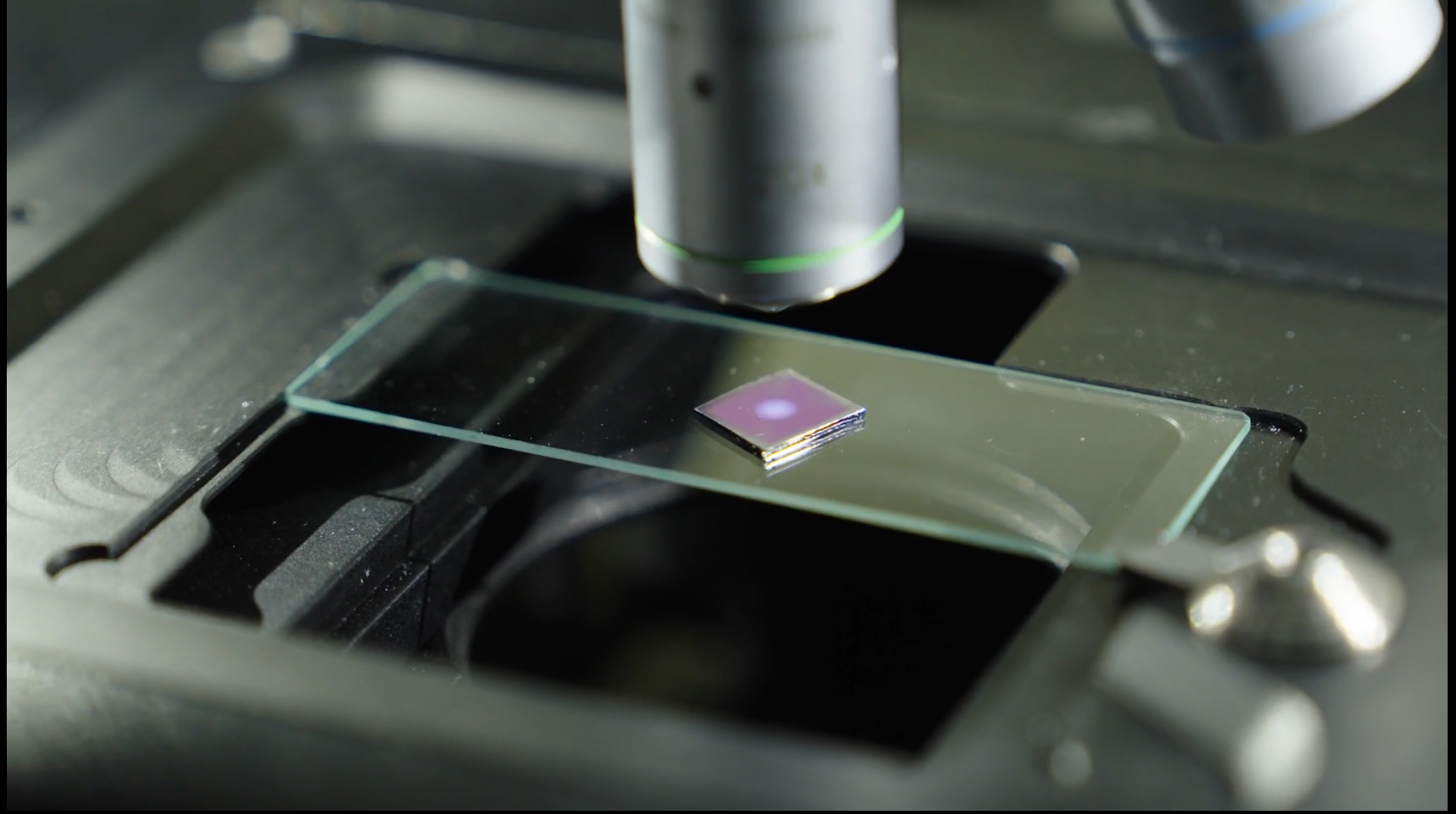

Nanoparticles and artificial intelligence can help researchers detect pollutants in water, soil and

Tiny particles bounce light around in a unique way, a property that researchers are using to detect…

Tiny recording backpacks reveal bats’ surprising hunting strategy

By listening in on their nightly hunts, scientists discovered that small, fringe-lipped bats are unexpectedly…