AI is about to radically alter military command structures that haven’t changed much since Napoleon

Today’s military commands struggle to manage battlefields that span cyberspace and outer space, as well as land, sea and air. AI is poised to help by breaking them out of their centuries-old structures.

Despite two centuries of evolution, the structure of a modern military staff would be recognizable to Napoleon. At the same time, military organizations have struggled to incorporate new technologies as they adapt to new domains – air, space and information – in modern war.

Military headquarters have grown to accommodate the expanded information flows and decision points of these new facets of warfare. The result is diminishing marginal returns and a coordination nightmare – too many cooks in the kitchen – that risks undermining mission command.

AI agents – autonomous, goal-oriented software powered by large language models – can automate routine staff tasks, compress decision timelines and enable smaller, more resilient command posts. They can shrink the staff while also making it more effective.

As an international relations scholar and reserve officer in the U.S. Army who studies military strategy, I see both the opportunity afforded by the technology and the acute need for change.

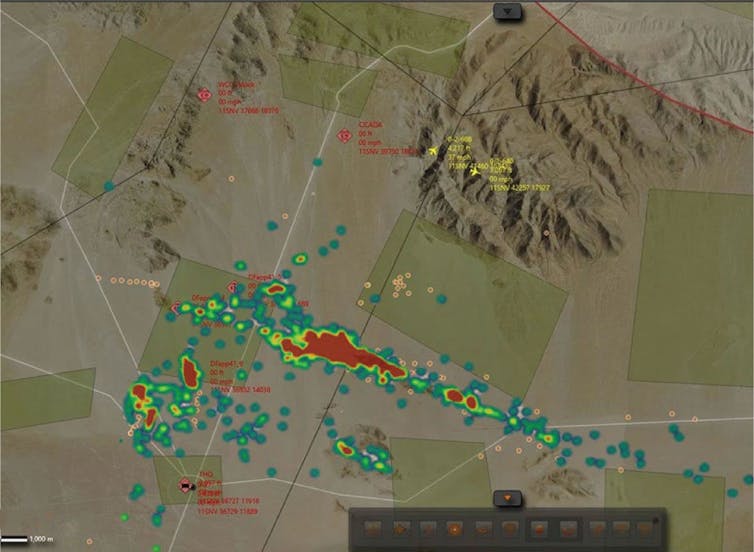

That need stems from the reality that today’s command structures still mirror Napoleon’s field headquarters in both form and function – industrial-age architectures built for massed armies. Over time, these staffs have ballooned in size, making coordination cumbersome. They also result in sprawling command posts that modern precision artillery, missiles and drones can target effectively and electronic warfare can readily disrupt.

Russia’s so-called “Graveyard of Command Posts” in Ukraine vividly illustrates how static headquarters become liabilities on a modern battlefield, where opponents can mass precision artillery, missiles and drones against them.

The role of AI agents

Military planners now see a world in which AI agents – autonomous, goal-oriented software that can perceive, decide and act on its own initiative – are mature enough to deploy in command systems. These agents promise to automate intelligence fusion from multiple sources, threat modeling and even limited decision cycles in support of a commander’s goals. Humans remain in the loop, but they will be able to issue commands faster and receive more timely, contextual updates from the battlefield.

These AI agents can parse doctrinal manuals, draft operational plans and generate courses of action, which helps accelerate the tempo of military operations. Experiments – including efforts I ran at Marine Corps University – have demonstrated how even basic large language models can speed up staff estimates and inject creative, data-driven options into the planning process. These results point to the end of traditional staff roles.

There will still be people – war is a human endeavor – and ethics will still have to factor into decisions made by streams of algorithms. But the people who remain deployed are likely to gain the ability to navigate massive volumes of information with the help of AI agents.

The teams that remain are likely to be smaller than today’s staffs, and AI agents will allow them to manage multiple planning groups simultaneously.

For example, these teams will be able to use more dynamic red teaming techniques – role-playing the opposition – and vary key assumptions to create a wider menu of options than traditional plans offer. The time saved by not having to build PowerPoint slides and update staff estimates will be shifted to contingency analysis – asking “what if” questions – and to building operational assessment frameworks – conceptual maps of how a plan is likely to play out in a particular situation – that give commanders more flexibility.

Designing the next military staff

To find the best design for this AI agent-augmented staff, I led a team of researchers at the Futures Lab of the Center for Strategic & International Studies, a bipartisan think tank, in exploring alternatives. The team developed three baseline scenarios reflecting what most military analysts see as the key operational problems in modern great power competition: joint blockades, firepower strikes and joint island campaigns. Joint refers to an action coordinated among multiple branches of a military.

In the case of China and Taiwan, a joint blockade describes how China could isolate the island nation and either starve it or set conditions for an invasion. Firepower strikes describe how Beijing could fire salvos of missiles – similar to what Russia is doing in Ukraine – to destroy key military centers and even critical infrastructure. Last, in Chinese doctrine, a Joint Island Landing Campaign describes the cross-strait invasion China’s military has spent decades refining.

Any AI agent-augmented staff should be able to manage warfighting functions across these three operational scenarios.

The research team found that the best-performing model kept humans in the loop. This approach – called the Adaptive Staff Model and based on pioneering work by sociologist Andrew Abbott – embeds AI agents within continuous human-machine feedback loops, drawing on doctrine, history and real-time data to evolve plans on the fly.

In this model, military planning is ongoing and never complete, and it focuses on generating a menu of options for the commander to consider, refine and enact. The research team tested the approach with multiple AI models and found that it outperformed the alternatives in each case.

AI agents are not without risk. First, they can be overly general, if not biased. Foundation models – AI models trained on extremely large datasets and adaptable to a wide range of tasks – know more about pop culture than about war and require refinement. This makes it important to benchmark agents to understand their strengths and limitations.

Second, absent training in AI fundamentals and advanced analytical reasoning, many users treat models as a substitute for critical thinking. No smart model can make up for a dumb, or worse, lazy user.

Seizing the ‘agentic’ moment

To take advantage of AI agents, the U.S. military will need to institutionalize building and adapting agents, include adaptive agents in war games, and overhaul doctrine and training to account for human-machine teams. This will require a number of changes.

First, the military will need to invest in additional computing power to build the infrastructure required to run AI agents across military formations. Second, it will need to develop additional cybersecurity measures and conduct stress tests to ensure the agent-augmented staff isn’t vulnerable when attacked across multiple domains, including cyberspace and the electromagnetic spectrum.

Third, and most important, the military will need to dramatically change how it educates its officers. Officers will have to learn how AI agents work, including how to build them, and start using the classroom as a lab to develop new approaches to the age-old art of military command and decision-making. This could include revamping some military schools to focus on AI, a concept floated in the White House’s AI Action Plan released on July 23, 2025.

Absent these reforms, the military is likely to remain stuck in the Napoleonic staff trap: adding more people to solve ever more complex problems.

Benjamin Jensen led a research project that was a collaboration between CSIS and Scale AI. He did not personally receive any funding from the company.
