Scientific norms shape the behavior of researchers working for the greater good

While rarely explicitly taught to scientists in training, a set of common values guides science in the quest to advance knowledge while being ethical and trustworthy.

Over the past 400 years or so, a set of mostly unwritten guidelines has evolved for how science should be properly done. The assumption in the research community is that science advances most effectively when scientists conduct themselves in certain ways.

The first person to write down these attitudes and behaviors was Robert Merton, in 1942. The founder of the sociology of science laid out what he called the “ethos of science,” a set of “values and norms which is held to be binding on the man of science.” (Yes, it’s sexist wording. Yes, it was the 1940s.) These now are referred to as scientific norms.

The point of these norms is that scientists should behave in ways that improve the collective advancement of knowledge. If you’re a cynic, you might be rolling your eyes at such a Pollyannaish ideal. But corny expectations keep the world functioning. Think: Be kind, clean up your mess, return the shopping cart to the cart corral.

I’m a physical geographer who realized long ago that students are taught biology in biology classes and chemistry in chemistry classes, but rarely are they taught about the overarching concepts of science itself. So I wrote a book called “The Scientific Endeavor,” laying out what scientists and other educated people should know about science itself.

Scientists in training are expected to learn the big picture of science after years of observing their mentors, but that doesn’t always happen. And understanding what drives scientists can help nonscientists better understand research findings. These scientific norms are a big part of the scientific endeavor. Here are Merton’s original four, along with a couple I think are worth adding to the list:

Universalism

Scientific knowledge is for everyone – it’s universal – and not the domain of an individual or group. In other words, a scientific claim must be judged on its merits, not on who is making it. Characteristics like a scientist’s nationality, gender or favorite sports team should not affect how their work is judged.

Also, the past record of a scientist shouldn’t influence how you judge whatever claim they’re currently making. For instance, Nobel Prize-winning chemist Linus Pauling was not able to convince most scientists that large doses of vitamin C are medically beneficial; his evidence didn’t sufficiently support his claim.

In practice, it’s hard to judge contradictory claims fairly when they come from a “big name” in the field versus an unknown researcher without a reputation. It is, however, easy to point out such breaches of universalism when others let scientific fame sway their opinion one way or another about new work.

Communism

Communism in science is the idea that scientific knowledge is the property of everyone and must be shared.

Jonas Salk, who led the research that resulted in the polio vaccine, provides a classic example of this scientific norm. He published the work and did not patent the vaccine so that it could be freely produced at low cost.

When scientific research doesn’t have direct commercial application, communism is easy to practice. When money is involved, however, things get complicated. Many scientists work for corporations, and they might not publish their findings in order to keep them away from competitors. The same goes for military research and cybersecurity, where publishing findings could help the bad guys.

Disinterestedness

Disinterestedness refers to the expectation that scientists pursue their work mainly for the advancement of knowledge, not to advance an agenda or get rich. The expectation is that a researcher will share the results of their work, regardless of a finding’s implications for their career or economic bottom line.

Research on politically hot topics, like vaccine safety, is where it can be tricky to remain disinterested. Imagine a scientist who is strongly pro-vaccine. If their vaccine research results suggest serious danger to children, the scientist is still obligated to share these findings.

Likewise, if a scientist has invested in a company selling a drug, and the scientist’s research shows that the drug is dangerous, they are morally compelled to publish the work even if that would hurt their income.

In addition, when publishing research, scientists are required to disclose any conflicts of interest related to the work. This step informs others that they may want to be more skeptical in evaluating the work, in case self-interest won out over disinterest.

Disinterestedness also applies to journal editors, who are obligated to decide whether to publish research based on the science, not the political or economic implications.

Organized skepticism

Merton’s last norm is organized skepticism. Skepticism does not mean rejecting ideas because you don’t like them. To be skeptical in science is to be highly critical and look for weaknesses in a piece of research.

This concept is formalized in the peer review process. When a scientist submits an article to a journal, the editor sends it to two or three scientists familiar with the topic and methods used. They read it carefully and point out any problems they find.

The editor then uses the reviewer reports to decide whether to accept the article as is, reject it outright or request revisions. If the decision is to revise, the author then either makes each requested change or tries to convince the editor that the reviewer is wrong.

Peer review is not perfect and doesn’t always catch bad research, but in most cases it improves the work, and science benefits. Traditionally, results weren’t made public until after peer review, but that practice has weakened in recent years with the rise of preprints, reducing the reliability of information for nonscientists.

Integrity and humility

I’m adding two norms to Merton’s list.

The first is integrity. It’s so fundamental to good science that it almost seems unnecessary to mention. But I think it’s justified since scientists who cheat, steal or are lazy are getting plenty of attention these days.

The second is humility. You may have made a contribution to our understanding of cell division, but don’t tell us that you cured cancer. You may be a leader in quantum mechanics research, but that doesn’t make you an authority on climate change.

Scientific norms are guidelines for how scientists are expected to behave. A researcher who violates one of these norms won’t be carted off to jail or fined an exorbitant fee. But when a norm is not followed, scientists must be prepared to justify their reasons, both to themselves and to others.

Jeffrey A. Lee does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

Read These Next

Trump says climate change doesn’t endanger public health – evidence shows it does, from extreme heat

Climate change is making people sicker and more vulnerable to disease. Erasing the federal endangerment…

FDA rejects Moderna’s mRNA flu vaccine application - for reasons with no basis in the law

The move signals an escalation in the agency’s efforts to interfere with established procedures for…

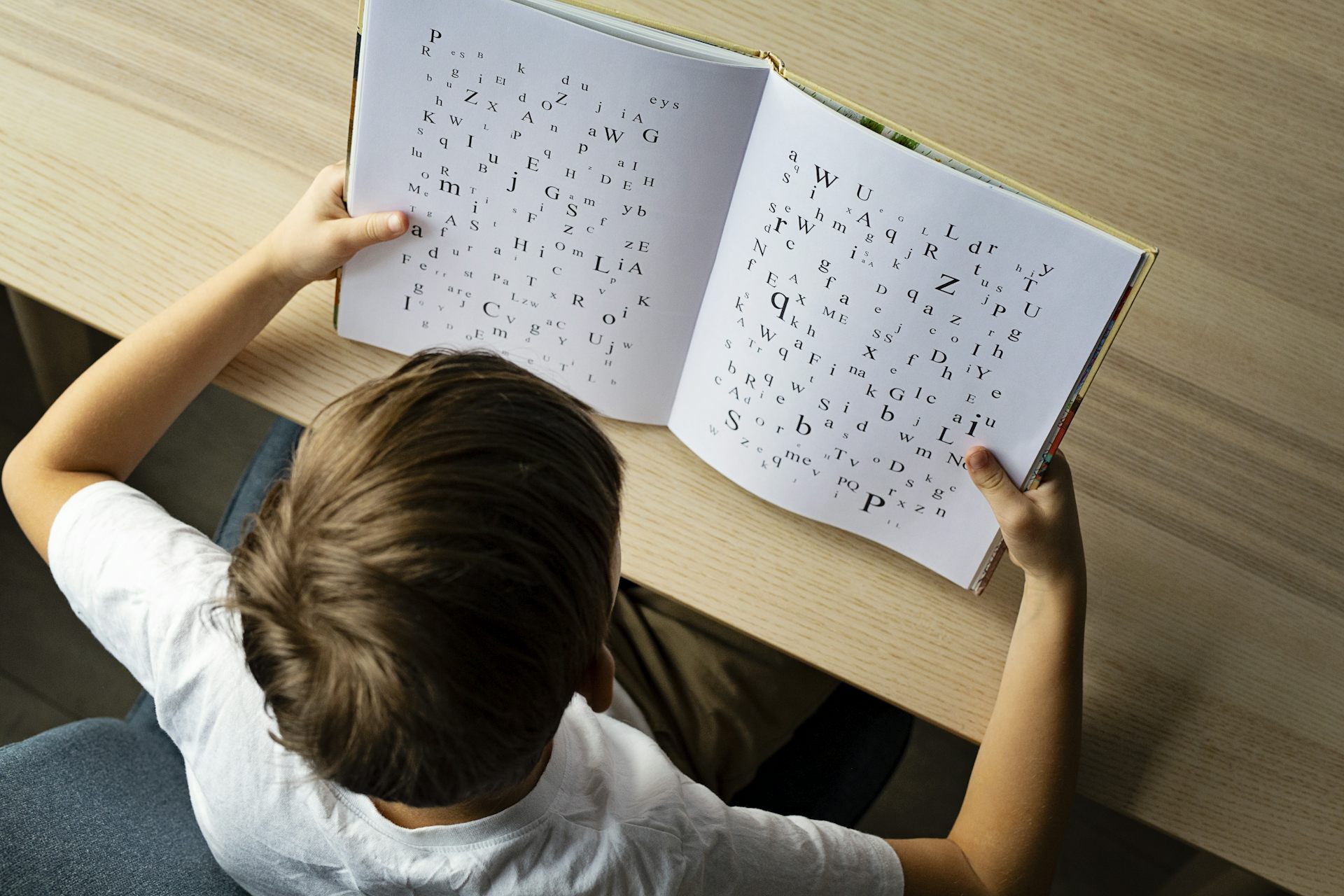

Nearly every state in the US has dyslexia laws – but our research shows limited change for strugglin

Dyslexia laws are now nearly universal across the US. But the data shows that passing a law is not the…