How man and machine can work together to diagnose diseases in medical scans

With artificial intelligence, machines can now examine thousands of medical images for signs of disease. Will this technology replace doctors – or work side by side with them?

With artificial intelligence, machines can now examine thousands of medical images – and billions of pixels within these images – to find patterns too subtle for a radiologist or pathologist to detect.

The machine then uses this information to identify the presence of a disease or estimate its aggressiveness, likelihood of survival or potential response to treatment.

We are engineers at the Center for Computational Imaging and Personalized Diagnostics. Our team works with physicians and statisticians to develop and validate these kinds of tools.

Many worry that this technology aims to replace doctors. But we believe the technology will work in tandem with humans, making them more efficient and helping with decisions on complicated cases.

Machine learning and medical images

In one study, researchers at Stanford showed that machines were just as accurate as trained dermatologists in distinguishing skin cancers from benign lesions in 100 test images.

In another, computer scientists at Google used an AI approach called deep learning to accurately identify which patients had diabetic retinopathy – a constellation of changes in the retina due to diabetes – from high-resolution photographs of the retina. Another deep learning project at Google successfully predicted cardiovascular disease risk from retina images.

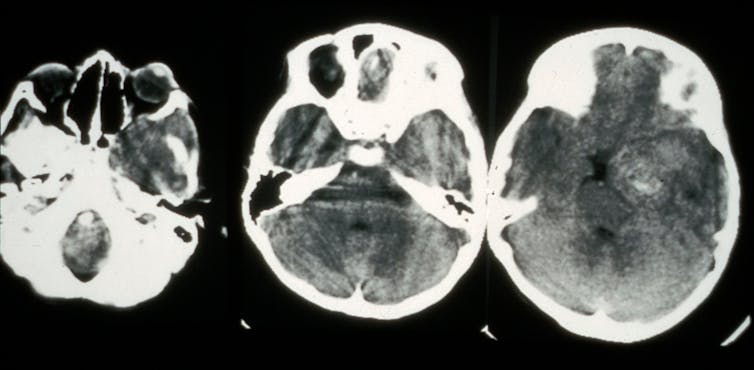

Our group has been developing new ways to identify disease in scans like MRI and CT, as well as digitized tissue slide images.

In images of biopsied heart tissue from 105 patients with heart disease, our algorithms predicted with high accuracy which patients would go on to develop heart failure.

In another study involving MRI scans from prostate cancer patients, our computer algorithms identified clinically significant disease in more than 70 percent of cases where radiologists missed it. In half of the cases where radiologists mistakenly thought that the patient had aggressive prostate cancer, the machine was able to correctly identify that no clinically significant disease was present.

Predicting outcomes and treatment response

Our team has also been developing approaches to predict a patient’s response to specific therapies and to monitor that response early in treatment.

Take the case of immunotherapy. Immunotherapy drugs boost the body’s own immune defenses to fight cancer. They have shown tremendous promise compared with traditional chemotherapy, but certain caveats limit their widespread use. Only about 1 in 5 patients with lung cancer might actually respond to immunotherapy regimens. Additionally, these therapies cost upwards of US$200,000 per patient per year and can have toxic effects on the immune system. Physicians need a way to determine accurately which patients might benefit.
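A rough back-of-the-envelope calculation with the figures above shows why patient selection matters. It assumes every treated patient receives a full year of therapy at about US$200,000 and that the 1-in-5 response rate holds; real costs and response rates vary by drug and patient group.

$$
\text{drug cost per responder} \approx \frac{\$200{,}000 \text{ per patient-year}}{1/5 \text{ responders per patient}} = \$1{,}000{,}000 \text{ per responder-year}
$$

Under those assumptions, each patient who benefits comes with roughly $800,000 spent on four patients who do not, and those four still face the risk of immune-related side effects.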

To identify the likelihood of a successful response before therapy begins, our lab is building software to examine routine diagnostic CT scans of lung tumors. The software looks at tumor texture, intensity and shape, as well as the shape of vessels feeding the nodules. This information might help oncologists optimize the therapy dosage or alter a patient’s treatment plan.
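To make "texture, intensity and shape" concrete, here is a minimal sketch of the kinds of measurements such software might compute. It assumes a single 2D CT slice and a binary tumor mask are already available. The helper names (`tumor_features` and friends) are hypothetical, and the features chosen (first-order intensity statistics, a gray-level co-occurrence matrix contrast, and a compactness ratio) are common radiomics choices, not necessarily the ones our lab uses.

```python
import numpy as np

def intensity_features(image, mask):
    """First-order statistics of pixel intensities inside the tumor mask."""
    values = image[mask].astype(float)
    return {"mean": float(values.mean()), "std": float(values.std()),
            "min": float(values.min()), "max": float(values.max())}

def glcm_contrast(image, mask, levels=8):
    """Texture: contrast of a gray-level co-occurrence matrix (GLCM) built
    from horizontally adjacent pixel pairs that both lie inside the mask."""
    lo, hi = float(image[mask].min()), float(image[mask].max())
    scale = hi - lo if hi > lo else 1.0  # avoid dividing by zero on flat regions
    # Quantize intensities into `levels` gray levels.
    q = np.clip(np.round((image - lo) / scale * (levels - 1)), 0, levels - 1).astype(int)
    pairs = mask[:, :-1] & mask[:, 1:]
    glcm = np.zeros((levels, levels))
    np.add.at(glcm, (q[:, :-1][pairs], q[:, 1:][pairs]), 1)
    if glcm.sum() == 0:
        return 0.0
    glcm /= glcm.sum()
    i, j = np.indices(glcm.shape)
    # High contrast means neighboring pixels often differ sharply in gray level.
    return float(((i - j) ** 2 * glcm).sum())

def shape_features(mask):
    """Area, pixel-edge perimeter and compactness 4*pi*area/perimeter**2
    (1.0 for an ideal circle; staircase pixel edges lower it)."""
    area = int(mask.sum())
    padded = np.pad(mask, 1).astype(int)
    # Each tumor/background change along a row or column is one exposed pixel edge.
    perimeter = int(np.abs(np.diff(padded, axis=0)).sum()
                    + np.abs(np.diff(padded, axis=1)).sum())
    compactness = 4 * np.pi * area / perimeter ** 2 if perimeter else 0.0
    return {"area": area, "perimeter": perimeter, "compactness": float(compactness)}

def tumor_features(image, mask):
    """Hypothetical helper combining intensity, texture and shape features."""
    mask = mask.astype(bool)
    feats = intensity_features(image, mask)
    feats["glcm_contrast"] = glcm_contrast(image, mask)
    feats.update(shape_features(mask))
    return feats
```

In a predictive pipeline, vectors of features like these, computed for many patients, would be fed to a statistical or machine learning model trained against known treatment outcomes.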

There are many cancers and other diseases where computational tools to predict disease aggressiveness or treatment response could aid physicians. For example, a study published this June compared women with breast cancer who had been treated with adjuvant chemotherapy or standard endocrine therapy. For about 70 percent of the patients, the chemotherapy had no demonstrated benefit compared to the standard approach. Sparing patients unnecessary and often harmful chemotherapy is therefore a key issue for doctors. Currently, however, the only way to predict outcome relies on expensive genomic tests that destroy tissue.

We are currently working on a new way to interrogate digitized tissue images from biopsies of the breast. Our new project, starting this year, will test the technology on women with breast cancers at Tata Memorial Hospital in Mumbai, India.

Making it possible

There is a massive opportunity for clinicians, radiologists and pathologists to enrich their decisions with artificial intelligence. That’s particularly true when it comes to building treatment plans tailored to the individual patient.

Before such technology can be used in hospitals, researchers like ourselves need to do further tests to ensure it’s reliable and valid. One way to do this is to test it on patients from multiple medical institutions.
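Multi-institution testing is often organized as leave-one-site-out validation: build the model on data from every institution but one, then measure how well it does on the institution it never saw. The sketch below assumes each record is a hypothetical `(site, score, label)` tuple and uses a deliberately toy "model" (a single threshold halfway between the two class means) purely to show the evaluation loop; real studies use far richer models and metrics.

```python
from statistics import mean

def fit_threshold(scores, labels):
    """Toy model: threshold halfway between the mean score of each class.
    Assumes the training data contain both positive and negative cases."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    return (mean(pos) + mean(neg)) / 2

def leave_one_site_out(records):
    """records: list of (site, score, label) tuples. For each site, train on
    all other sites and report accuracy on the held-out site."""
    results = {}
    for site in sorted({r[0] for r in records}):
        train = [r for r in records if r[0] != site]
        test = [r for r in records if r[0] == site]
        threshold = fit_threshold([r[1] for r in train], [r[2] for r in train])
        # A prediction is correct when "score above threshold" matches the label.
        correct = sum((r[1] > threshold) == (r[2] == 1) for r in test)
        results[site] = correct / len(test)
    return results

records = [("A", 0.9, 1), ("A", 0.2, 0),
           ("B", 0.8, 1), ("B", 0.1, 0),
           ("C", 0.45, 1), ("C", 0.3, 0)]
print(leave_one_site_out(records))  # {'A': 1.0, 'B': 1.0, 'C': 0.5}
```

In this made-up data, site C's scores run lower overall (as might happen with a different scanner), and that is exactly where the model stumbles; testing at a single institution would have hidden the problem.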

It’s also important for physicians to be able to interpret the technology. They’re unlikely to adopt technology that cannot be explained by existing biology research. For example, our lung tumor software looks at vessel shape because studies show that the degree of convolutedness of the vessels feeding the tumor can negatively affect drug delivery.
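The "convolutedness" of a vessel is usually called tortuosity. One simple and widely used measure is the distance metric: the vessel's path length divided by the straight-line distance between its endpoints. The sketch below assumes the vessel centerline has already been extracted as an ordered list of coordinates; the function name is hypothetical, and our lung software may use different or richer descriptors.

```python
import math

def distance_metric_tortuosity(points):
    """Path length of a vessel centerline divided by the straight-line
    distance between its endpoints. 1.0 means perfectly straight;
    larger values mean a more convoluted vessel."""
    if len(points) < 2:
        raise ValueError("need at least two centerline points")
    path = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    chord = math.dist(points[0], points[-1])
    if chord == 0:
        raise ValueError("endpoints coincide; ratio is undefined")
    return path / chord

print(distance_metric_tortuosity([(0, 0), (1, 0), (2, 0)]))  # 1.0
print(distance_metric_tortuosity([(0, 0), (1, 1), (2, 0)]))  # about 1.414
```

A number like this is easy for a clinician to relate back to the underlying biology, which is part of what makes such a feature interpretable.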

That’s why it’s crucial that artificial intelligence researchers like ourselves engage clinicians early in the development process as equal collaborators.

Dr. Anant Madabhushi is an equity holder in Elucid Bioimaging and in Inspirata Inc. He is also a scientific advisory consultant for Inspirata Inc. In addition, he currently serves as a scientific advisory board member for Inspirata Inc. and for AstraZeneca. He also has sponsored research agreements with Philips and Inspirata Inc. His technology has been licensed to Elucid Bioimaging and Inspirata Inc. He is also involved in an NIH U24 grant with PathCore Inc. and an R01 with Inspirata Inc.

Kaustav Bera does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.
