Remote education is rife with threats to student privacy

Has technology gone too far to keep students honest during exams? A scholar on privacy and technology weighs in.

An online “proctor” who can survey a student’s home and manipulate the mouse on their computer as the student takes an exam. A remote-learning platform that takes face scans and voiceprints of students. Virtual classrooms where strangers can pop up out of the blue and see who’s in class.

These three unnerving scenarios are not hypothetical. Rather, they are stark, real-life examples of how remote learning during the pandemic, at both the K-12 and college levels, has become riddled with threats to students' privacy.

As a scholar of privacy, I believe all the electronic eyes watching students these days have created privacy concerns that merit more attention.

Increasingly, aggrieved students, parents and digital privacy advocates are seeking to hold schools and technology platforms accountable for running afoul of student privacy law.

Concerns and criticisms

For instance, the American Civil Liberties Union of Massachusetts has accused that state of lacking sufficient measures to protect the privacy of school and college students.

Students are pushing universities to stop using invasive software such as proctoring apps, which some schools and colleges use to keep students from cheating on exams. They have filed numerous petitions asking administrators and teachers to drop these apps. In a letter to the California Supreme Court, the Electronic Frontier Foundation, an international nonprofit that defends digital rights, wrote that the use of remote-proctoring technologies is essentially the same as spying.

A series of security breaches illustrates why students and privacy advocates are fighting against online proctoring apps.

For instance, in July 2020, the online proctoring service ProctorU suffered a breach in which sensitive personal information on 444,000 students, including their names, email addresses, home addresses, phone numbers and passwords, was leaked. The data then became available on online hacker forums. Cybercriminals can use such information to launch phishing attacks, steal people's identities and fraudulently obtain loans in their names.

A public petition started by students at Washington State University raised concerns about ProctorU's weak security practices. It had more than 1,900 signatures as of Nov. 5.

Students compelled to share sensitive data

Some online proctoring companies have engaged in activities that violate students' privacy. For example, the CEO of Proctorio, which makes online proctoring software, violated a student's privacy by posting the student's chat logs on the social news forum Reddit.

To use online proctoring apps, students are required to provide full access to their devices, including all personal files. They are also asked to turn on their computer's video camera and microphone. Some national advocacy groups of parents, teachers and community members argue that requiring students to turn on their cameras during virtual classes or exams, exposing their rooms to a stranger's view, violates their civil rights.

Fair information practices, a widely used set of privacy principles summarized by the International Association of Privacy Professionals, require that information be collected by fair means. Because online proctoring apps use methods that cause anxiety and stress for many students, I would argue that they fail this test and are thus unfair.

When students are forced to disclose sensitive information against their wishes, it can harm them psychologically. Some students also experience physical symptoms of stress and anxiety. One student vomited from the stress of a statistics exam, and she did so at her desk at home because no bathroom breaks were permitted.

Poor technology performance

These privacy-invasive proctoring tools rely on artificial intelligence, which can affect some groups of students more adversely than others.

For instance, these programs may flag body-focused repetitive behaviors such as hair pulling (trichotillomania), as well as the symptoms of chronic tic disorder and other health conditions, as cheating.

Artificial intelligence also performs poorly at identifying the faces of students from ethnic minorities and students with darker skin. Some of these students face extra hassles, such as having to contact technical support to resolve the problem, which can leave them with less than the allotted time to complete the exam.

One student who experienced this snafu blamed the situation on “racist technology.”

Lawsuits and regulatory concerns

Schools and providers of remote learning technology are facing several lawsuits and regulatory actions.

For example, an Illinois parent has sued Google. The lawsuit alleges that Google's G Suite for Education apps illegally collected children's biometric data, such as facial scans and voiceprints (the measurable characteristics of a person's voice that can identify them), and that these practices violate the Illinois Biometric Information Privacy Act.

In April, a group of California parents filed a federal lawsuit against Google over G Suite for Education on similar grounds.

In some cases, officials have acted to limit the privacy risks posed by remote learning tools with weak security. For instance, New York City's Department of Education banned the video communications app Zoom over privacy and other concerns. Zoom's weak security had allowed many reported cases of "Zoombombing," a form of harassment in which intruders gain access to virtual classrooms.

In such situations, schools face two major problems. First, the video, audio and chat in Zoom recordings contain personally identifiable information such as students' faces, voices and names. These recordings are thus education records subject to the Family Educational Rights and Privacy Act, which is meant to protect the privacy of student education records.

Second, such information should not be accessible to anyone who is not in the class. When teachers cannot prevent unintended participants from joining a virtual class, the result can be a violation of the Family Educational Rights and Privacy Act.

What K-12 schools and universities can do

Growing scrutiny and criticism of privacy-invasive, spyware-like software may push schools and universities to reconsider using it. One option is to give open-note, open-book exams that do not require proctoring.


More generally, the artificial intelligence behind these tools is still immature. For instance, to accurately detect cheating on exams, AI algorithms may need to be trained on millions of pictures and videos of students cheating. This has not yet happened in most areas, including remote learning. The artificial intelligence industry has been described as being in its infancy. Even more established applications such as facial recognition have been trained mainly on images of white men and, as a result, misidentify members of ethnic minorities. Thus, I don't believe this technology is currently appropriate for remote proctoring.

Nir Kshetri does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

Read These Next

Counter-drone technologies are evolving – but there’s no surefire way to defend against drone attack

Companies are selling a range of anti-drone devices, from guns that fire nets to powerful laser weapons,…

Trump says climate change doesn’t endanger public health – evidence shows it does, from extreme heat

Climate change is making people sicker and more vulnerable to disease. Erasing the federal endangerment…

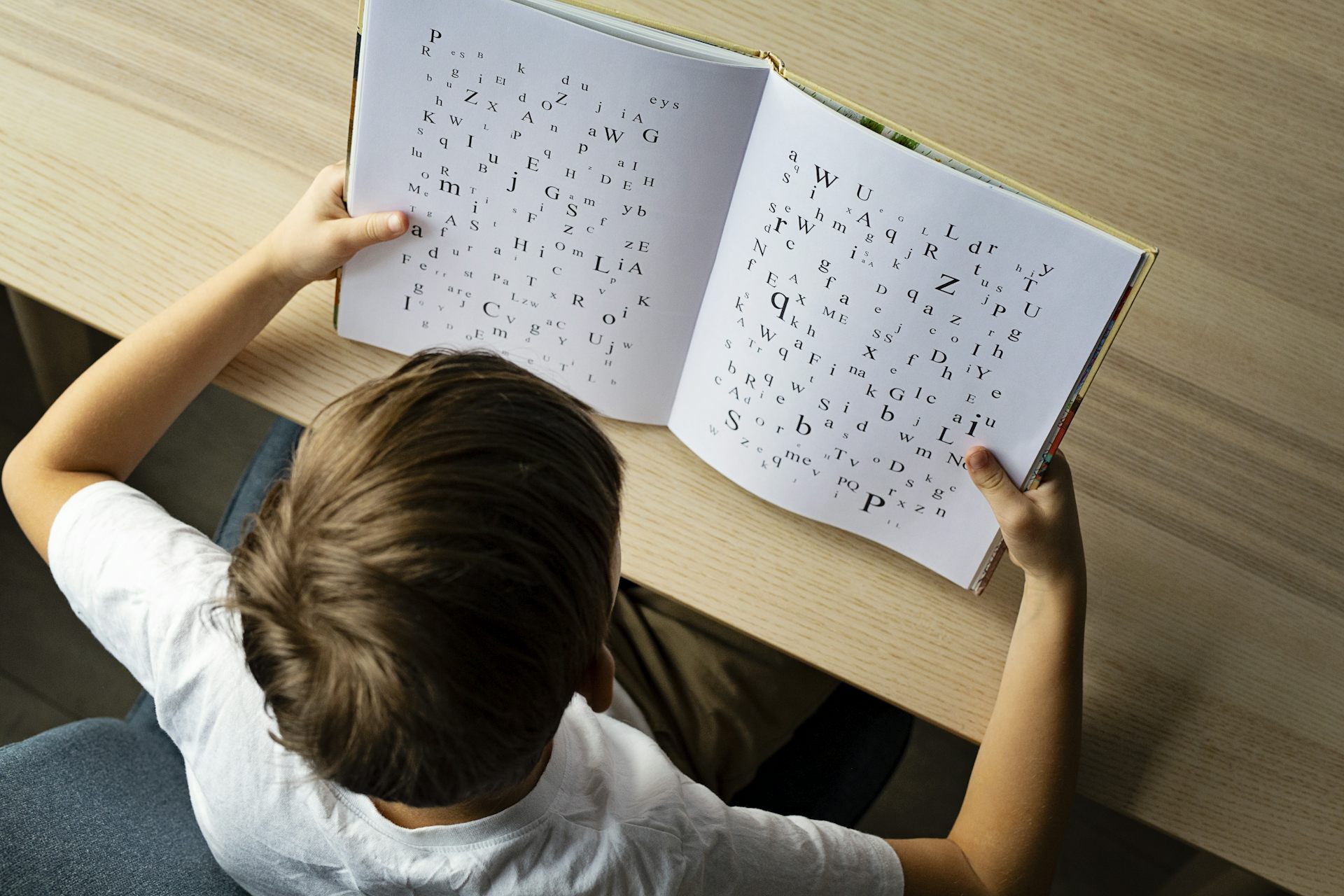

Nearly every state in the US has dyslexia laws – but our research shows limited change for strugglin

Dyslexia laws are now nearly universal across the US. But the data shows that passing a law is not the…